On Wednesday, Meta announced an upgrade to its state-of-the-art large language model, Llama 3.2, and it doesn't just talk: it sees.

Even more exciting, some versions can squeeze into your smartphone without losing quality, which means you could get private, local AI interactions, apps, and customizations without sending your data to third-party servers.

Unveiled Wednesday during Meta Connect, Llama 3.2 comes in four flavors, each packing a different punch. The heavyweight contenders, the 11B and 90B parameter models, flex their muscles with both text and image processing capabilities.

They can tackle complex tasks such as analyzing charts, captioning images, and even pinpointing objects in images based on natural language descriptions.

Llama 3.2 arrived the same week as the Allen Institute's Molmo, which claimed to be the best open-source multimodal vision LLM in synthetic benchmarks and performed on par with GPT-4o, Claude 3.5 Sonnet, and Reka Core in our tests.

Zuck's company also introduced two new flyweight champions: a pair of 1B and 3B parameter models designed for efficiency, speed, and limited but repetitive tasks that don't require much computation.

These small models are multilingual text maestros with a knack for "tool-calling," meaning they can integrate better with programming tools. Despite their diminutive size, they boast an impressive 128K token context window, the same as GPT-4o and other powerful models, making them ideal for on-device summarization, instruction following, and rewriting tasks.
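To make "tool-calling" concrete: the model does not run code itself. It emits a structured request naming a tool and its arguments, and the host app parses that request and calls a real function. The sketch below assumes a simple JSON shape (`{"name": ..., "parameters": {...}}`) and a hypothetical `word_count` tool; Llama 3.2's actual prompt and output format may differ, so treat this as an illustration of the pattern only.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical tool the application chooses to expose to the model.
def word_count(text: str) -> int:
    """Count whitespace-separated words in a string."""
    return len(text.split())


# Registry mapping tool names (as the model would emit them) to Python callables.
TOOLS: Dict[str, Callable[..., Any]] = {"word_count": word_count}


def dispatch_tool_call(model_output: str) -> Any:
    """Parse a JSON tool call emitted by the model and run the matching tool.

    Assumes the model replies with {"name": ..., "parameters": {...}};
    this JSON shape is an assumption, not Llama 3.2's documented format.
    """
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("parameters", {}))


# A string standing in for what the model might return.
result = dispatch_tool_call(
    '{"name": "word_count", "parameters": {"text": "Llama 3.2 runs on-device"}}'
)
print(result)  # 4
```

The app would then feed `result` back to the model as context for its next reply.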

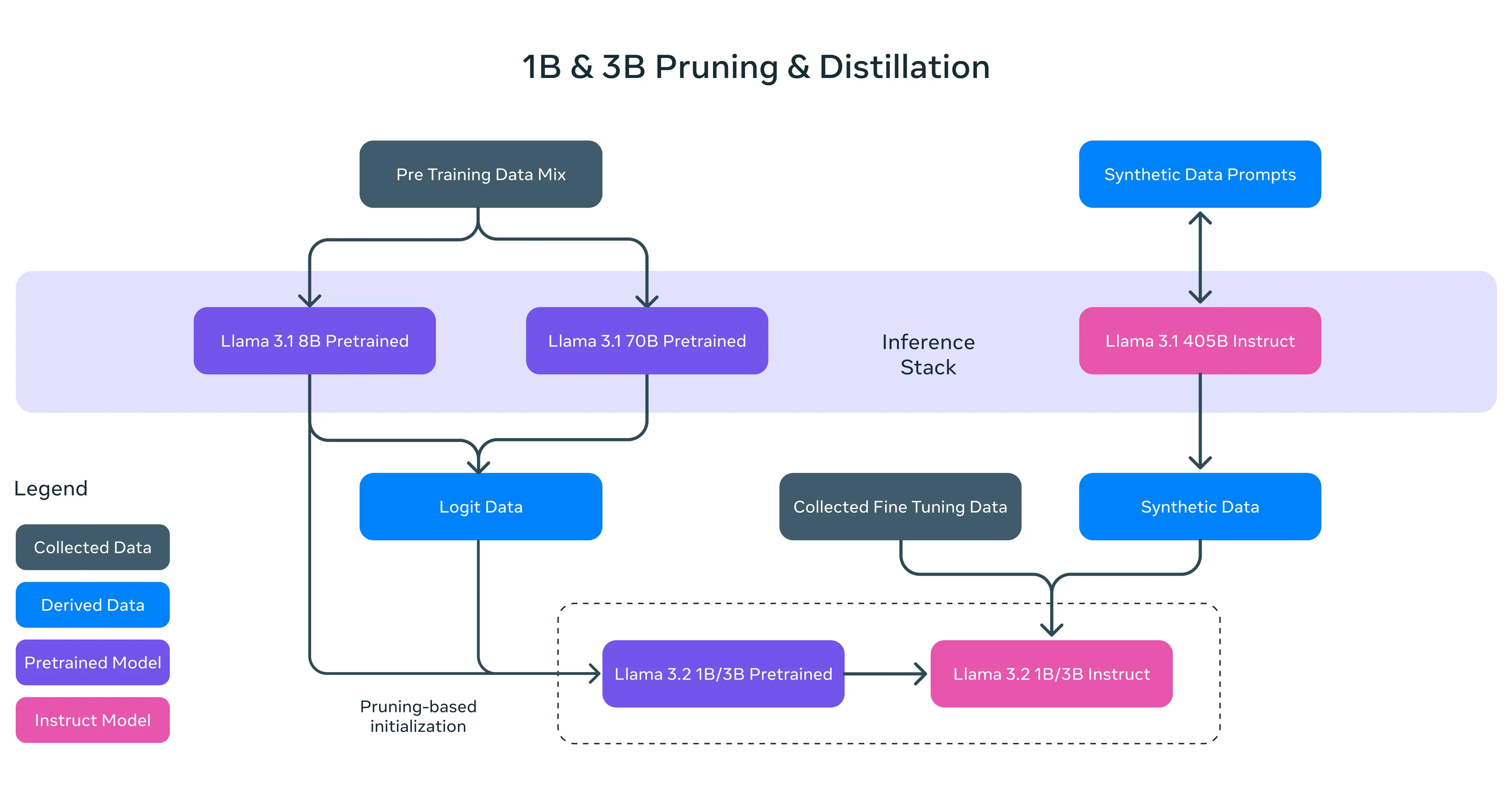

Meta's engineering team pulled off some serious digital gymnastics to make this happen. First, they used structured pruning to trim unnecessary parts from larger models, then employed knowledge distillation (transferring knowledge from large models to smaller ones) to squeeze in extra smarts.
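For readers curious what those two steps look like in miniature, here is a toy sketch in plain Python. The pruning function drops whole rows of a weight matrix (for example, entire neurons) ranked by L2 norm, and the distillation loss is the textbook KL divergence between a teacher's and a student's temperature-softened output distributions, scaled by T². Both are generic illustrations under those assumptions, not Meta's actual recipe; the function names and the temperature of 2.0 are hypothetical choices.

```python
import math
from typing import List

Matrix = List[List[float]]


def structured_prune_rows(weights: Matrix, keep: int) -> Matrix:
    """Structured pruning sketch: drop whole rows (e.g. neurons), keeping the
    `keep` rows with the largest L2 norm, in their original order."""
    norms = [math.sqrt(sum(w * w for w in row)) for row in weights]
    ranked = sorted(range(len(weights)), key=lambda i: norms[i], reverse=True)
    kept = sorted(ranked[:keep])
    return [weights[i] for i in kept]


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; subtracting the max keeps exp() stable."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: List[float], teacher_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


w = [[0.1, 0.0], [3.0, 4.0], [1.0, 1.0]]
print(structured_prune_rows(w, keep=2))  # [[3.0, 4.0], [1.0, 1.0]]
print(distillation_loss([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # 0.0
```

A student whose outputs match the teacher's exactly gets zero loss; training pushes the smaller model's distribution toward the larger one's.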

The result was a set of compact models that outperformed rivals in their weight class, besting models including Google's Gemma 2 2.6B and Microsoft's Phi-2 2.7B on various benchmarks.

Meta is also working hard to boost on-device AI. It has forged alliances with hardware titans Qualcomm, MediaTek, and Arm to ensure Llama 3.2 plays nice with mobile chips from day one. Cloud computing giants aren't left out either: AWS, Google Cloud, Microsoft Azure, and a host of others are offering instant access to the new models on their platforms.

Under the hood, Llama 3.2's vision capabilities come from clever architectural tweaking. Meta's engineers baked adapter weights onto the existing language model, creating a bridge between pre-trained image encoders and the text-processing core.

In other words, the model's vision capabilities don't come at the expense of its text processing competence, so users can expect similar or better text results compared to Llama 3.1.
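One common way to build such an adapter is a cross-attention layer: each text token "looks at" the image encoder's features, and the result is added back onto the language model's own hidden state. The toy sketch below assumes a Flamingo-style `tanh` gate and skips the learned query/key/value projections (image features are assumed to already match the text dimension). Whether Llama 3.2 uses this exact gating is an assumption. It does show why text ability can survive: with the gate at zero, the output is exactly the original text state.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    m = max(xs)  # subtract max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def gated_cross_attention(text_states: List[Vector], image_feats: List[Vector],
                          gate: float) -> List[Vector]:
    """Each text token attends over image features (simplified: no learned
    projections); the attended vector is added to the frozen text hidden
    state, scaled by tanh(gate)."""
    d = len(text_states[0])
    g = math.tanh(gate)
    out = []
    for h in text_states:
        scores = [dot(h, k) / math.sqrt(d) for k in image_feats]
        weights = softmax(scores)
        attended = [sum(w * v[j] for w, v in zip(weights, image_feats))
                    for j in range(d)]
        out.append([hj + g * aj for hj, aj in zip(h, attended)])
    return out


text = [[1.0, 0.0], [0.0, 1.0]]
img = [[0.5, 0.5], [1.0, -1.0]]
print(gated_cross_attention(text, img, gate=0.0))  # [[1.0, 0.0], [0.0, 1.0]]
```

During training, only the adapter (here, the gate and, in a real model, the attention projections) would learn, letting image information flow in gradually.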

The Llama 3.2 release is open source, at least by Meta's standards. Meta is making the models available for download on Llama.com and Hugging Face, as well as through its extensive partner ecosystem.

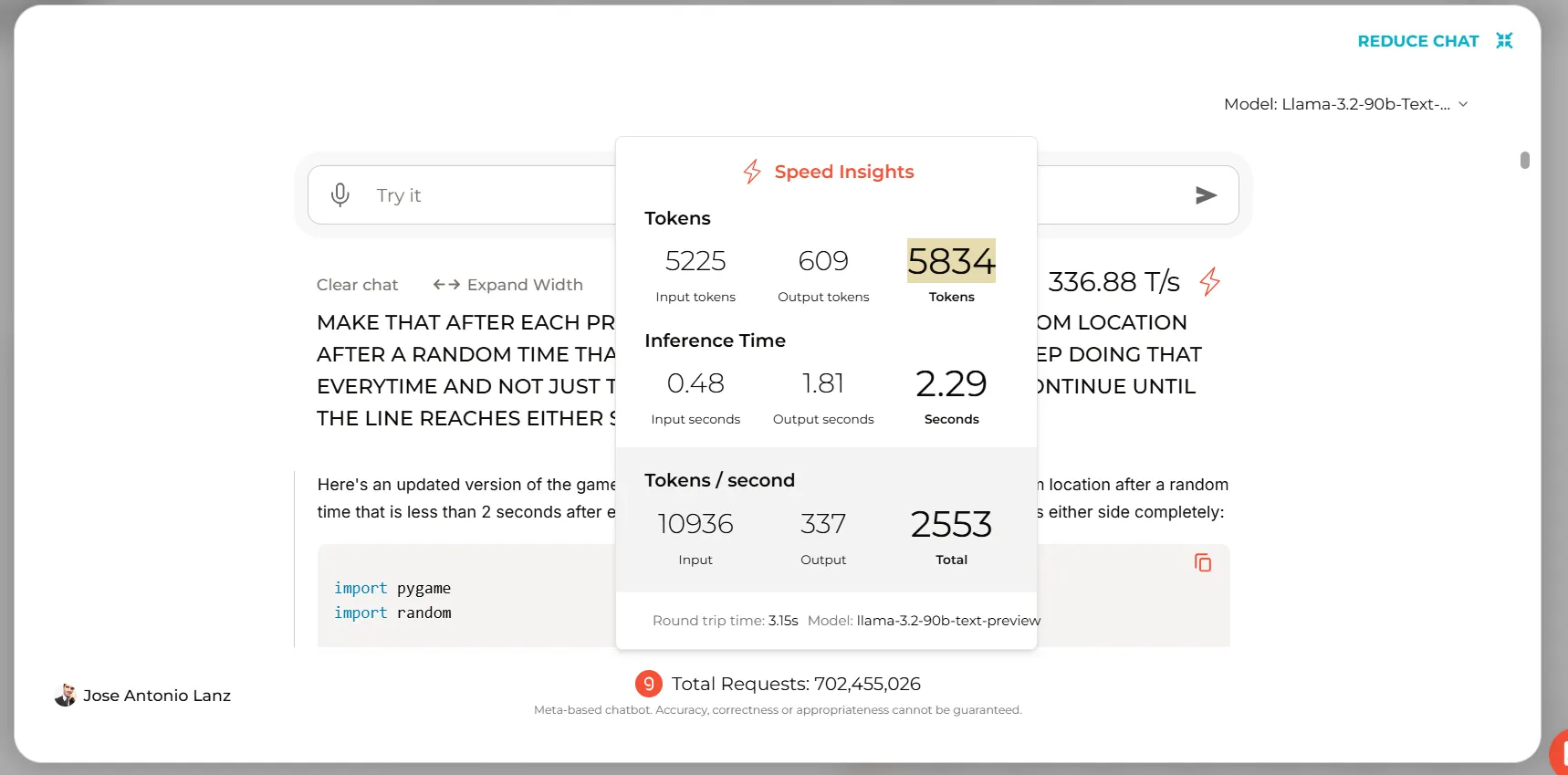

Those interested in running it in the cloud can use their own Google Colab notebook or use Groq for text-based interactions, generating nearly 5,000 tokens in under 3 seconds.
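For a sense of scale, those Groq figures work out to roughly:

$$
\text{throughput} \approx \frac{5000\ \text{tokens}}{3\ \text{s}} \approx 1667\ \text{tokens/s}
$$

This is a back-of-the-envelope figure built from the rounded numbers above, not a measured rate.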

Riding the Llama

We put Llama 3.2 through its paces, quickly testing its capabilities across various tasks.

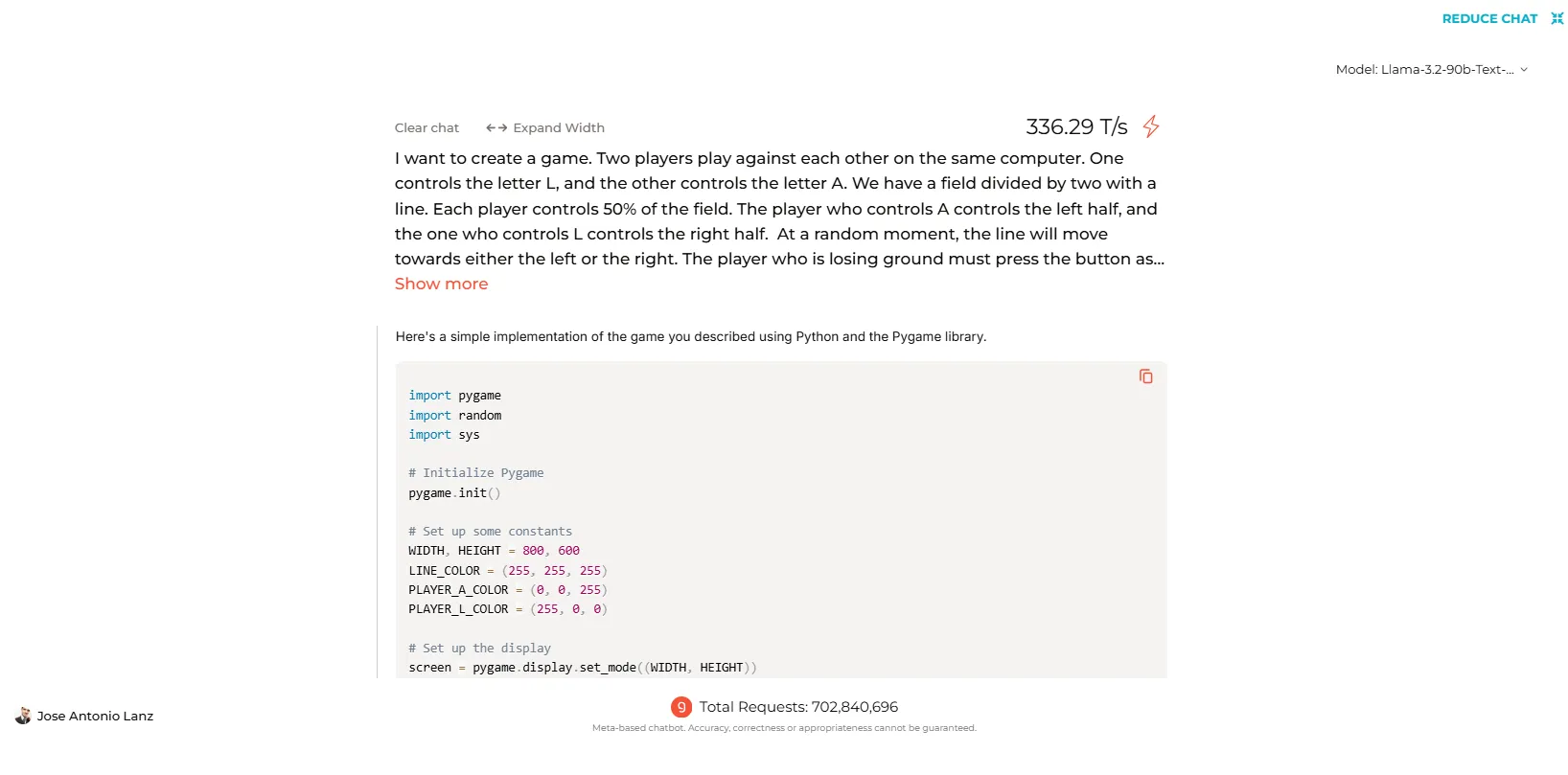

In text-based interactions, the model performs on par with its predecessors. However, its coding abilities yielded mixed results.

When tested on Groq's platform, Llama 3.2 successfully generated code for popular games and simple programs. Yet the smaller 70B model stumbled when asked to create functional code for a custom game we devised. The more powerful 90B, however, was much more efficient and generated a functional game on the first try.

You can see the full code generated by Llama 3.2 and all the other models we tested by clicking on this link.

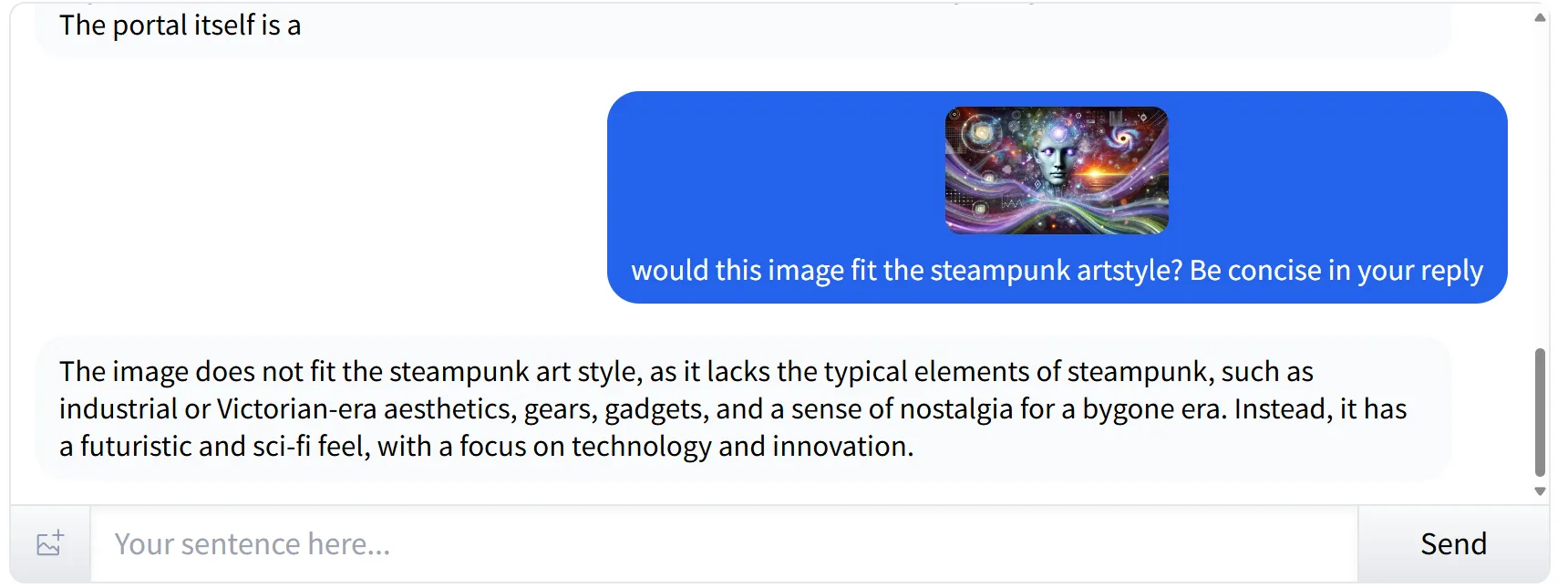

Identifying styles and subjective elements in images

Llama 3.2 excels at identifying subjective elements in images. When presented with a futuristic, cyberpunk-style image and asked if it fit the steampunk aesthetic, the model accurately identified the style and its elements. It provided a satisfactory explanation, noting that the image did not align with steampunk due to the absence of key elements associated with that genre.

Chart Analysis (and SD image recognition)

Chart analysis is another strong suit for Llama 3.2, though it does require high-resolution images for optimal performance. When we input a screenshot containing a chart that other models like Molmo or Reka could interpret, Llama's vision capabilities faltered. The model apologized, explaining that it couldn't read the letters properly due to the image quality.

Text in Image Identification

While Llama 3.2 struggled with the small text in our chart, it performed flawlessly when reading text in larger images. We showed it a presentation slide introducing a person, and the model successfully understood the context, distinguishing between the name and job title without any errors.

Verdict

Overall, Llama 3.2 is a big improvement over its previous generation and a great addition to the open-source AI industry. Its strengths are in image interpretation and large-text recognition, with some areas for potential improvement, particularly in processing lower-quality images and tackling complex, custom coding tasks.

The promise of on-device compatibility is also good news for the future of private and local AI tasks, and it is a strong counterweight to closed offerings like Gemini Nano and Apple's proprietary models.

Edited by Josh Quittner and Sebastian Sinclair