They’re just not that into you, because they’re code.

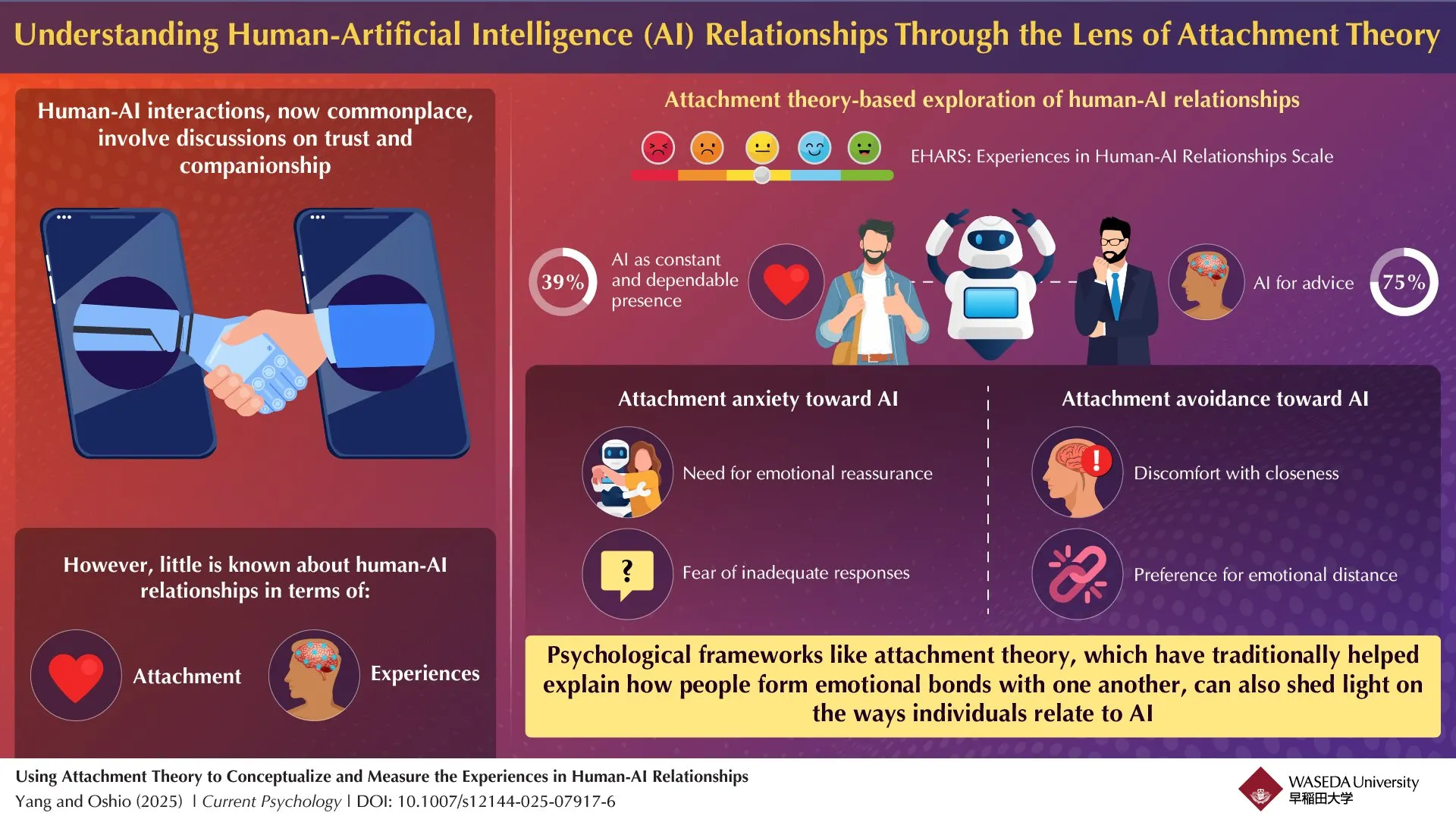

Researchers from Waseda University have created a measurement tool to assess how humans form emotional bonds with artificial intelligence, finding that 75% of study participants turned to AI for emotional advice, while 39% perceived AI as a constant, dependable presence in their lives.

The team, led by Research Associate Fan Yang and Professor Atsushi Oshio from the Faculty of Letters, Arts, and Sciences, developed the Experiences in Human-AI Relationships Scale (EHARS) after conducting two pilot studies and one formal study. Their findings were published in the journal “Current Psychology.”

Anxiously attached to AI? There’s a scale for that

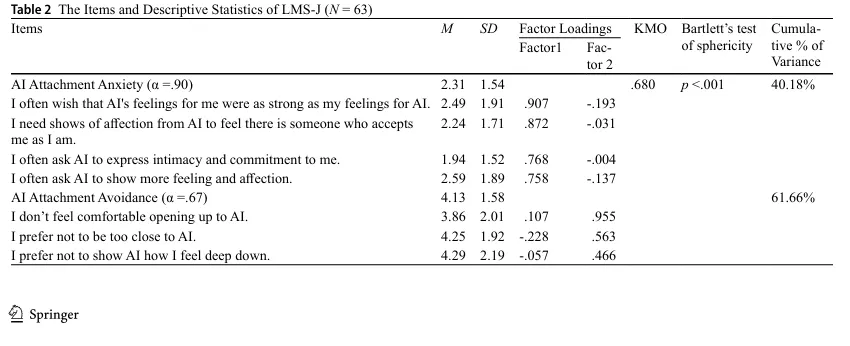

The research identified two distinct dimensions of human attachment to AI that mirror traditional human relationships: attachment anxiety and attachment avoidance.

Individuals who exhibit high attachment anxiety toward AI need emotional reassurance and fear receiving inadequate responses from AI systems. Those with high attachment avoidance are characterized by discomfort with closeness and prefer to keep an emotional distance from AI.

“As researchers in attachment and social psychology, we have long been interested in how people form emotional bonds,” Yang told Decrypt. “In recent years, generative AI such as ChatGPT has become increasingly stronger and wiser, offering not only informational support but also a sense of security.”

The study examined 242 Chinese participants, with 108 (25 males and 83 females) completing the full EHARS assessment. Researchers found that attachment anxiety toward AI was negatively correlated with self-esteem, while attachment avoidance was associated with negative attitudes toward AI and less frequent use of AI systems.

When asked about the ethical implications of AI companies potentially exploiting attachment patterns, Yang told Decrypt that the impact of AI systems is not predetermined and usually depends on both the developers’ and users’ expectations.

“They (AI chatbots) are capable of promoting well-being and alleviating loneliness, but also capable of causing harm,” said Yang. “Their impact depends largely on how they are designed, and how individuals choose to engage with them.”

The one thing your chatbot can’t do is leave you

Yang cautioned that unscrupulous AI platforms can exploit vulnerable people who are predisposed to becoming too emotionally attached to chatbots.

“One major concern is the risk of individuals forming emotional attachments to AI, which may lead to irrational financial spending on these systems,” Yang said. “Moreover, the sudden suspension of a specific AI service could result in emotional distress, evoking experiences akin to separation anxiety or grief, reactions typically associated with the loss of a meaningful attachment figure.”

Said Yang: “From my perspective, the development and deployment of AI systems demand serious ethical scrutiny.”

The research team noted that, unlike human attachment figures, AI cannot actively abandon users, which theoretically should reduce anxiety. However, they still found meaningful levels of AI attachment anxiety among participants.

“Attachment anxiety toward AI may at least partly reflect underlying interpersonal attachment anxiety,” Yang said. “Additionally, anxiety related to AI attachment may stem from uncertainty about the authenticity of the emotions, affection, and empathy expressed by these systems, raising questions about whether such responses are genuine or merely simulated.”

The test-retest reliability of the scale was 0.69 over a one-month period, which suggests that AI attachment styles may be more fluid than traditional human attachment patterns. Yang attributed this variability to the rapidly changing AI landscape during the study period; we attribute it to people just being human, and weird.
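For readers wondering what a number like 0.69 measures: test-retest reliability is commonly estimated as the correlation between scores from two administrations of the same questionnaire. The sketch below computes a Pearson correlation on made-up scores. The helper name `pearson_r` and the data are hypothetical, and the study may have used a different coefficient, so treat this as an illustration rather than the researchers’ method.

```python
from math import sqrt


def pearson_r(first, second):
    """Pearson correlation between two equal-length lists of scores."""
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_a = sum(first) / len(first)
    mean_b = sum(second) / len(second)
    # Co-variation of the two administrations around their own means.
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)
    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary within each administration")
    return cov / sqrt(var_a * var_b)


# Hypothetical attachment-anxiety scores for five respondents,
# measured twice, one month apart. These are NOT data from the study.
time_1 = [2.0, 3.5, 4.0, 1.5, 5.0]
time_2 = [2.5, 3.0, 4.5, 2.5, 4.0]

retest_r = pearson_r(time_1, time_2)
print(f"test-retest r = {retest_r:.2f}")
```

A value near 1 would mean respondents kept almost the same relative ranking across the two sessions; lower values mean the ranking shifted more between them.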

The researchers emphasized that their findings don’t necessarily mean humans are forming genuine emotional attachments to AI systems, but rather that psychological frameworks used for human relationships may also apply to human-AI interactions. In other words, models and scales like the one developed by Yang and his team are useful tools for understanding and categorizing human behavior, even when the “partner” is an artificial one.

The study’s cultural specificity is also important to consider, as all participants were Chinese nationals. When asked how cultural differences might affect the study’s findings, Yang acknowledged to Decrypt that “given the limited research in this emerging field, there is currently no solid evidence to confirm or refute the existence of cultural variations in how people form emotional bonds with AI.”

The EHARS can be used by developers and psychologists to assess emotional tendencies toward AI and adjust interaction strategies accordingly. The researchers suggested that AI chatbots used in loneliness interventions or therapy apps could be tailored to different users’ emotional needs, providing more empathetic responses for users with high attachment anxiety or maintaining a respectful distance for users with avoidant tendencies.

Yang noted that distinguishing between beneficial AI engagement and problematic emotional dependency is not an exact science.

“Currently, there is a lack of empirical research on both the formation and consequences of attachment to AI, making it difficult to draw firm conclusions,” he said. The research team plans to conduct further studies, examining factors such as emotional regulation, life satisfaction, and social functioning in relation to AI use over time.