Artificial intelligence (AI) tools like OpenAI's ChatGPT can perform many functions, but users often encounter glitches. Recently, reports surfaced that ChatGPT stopped working when asked about certain names, such as David Mayer.

The community is now questioning how the model handles sensitive or legally complex information. We can expect AI tools to face more privacy concerns amid a growing number of lawsuits.

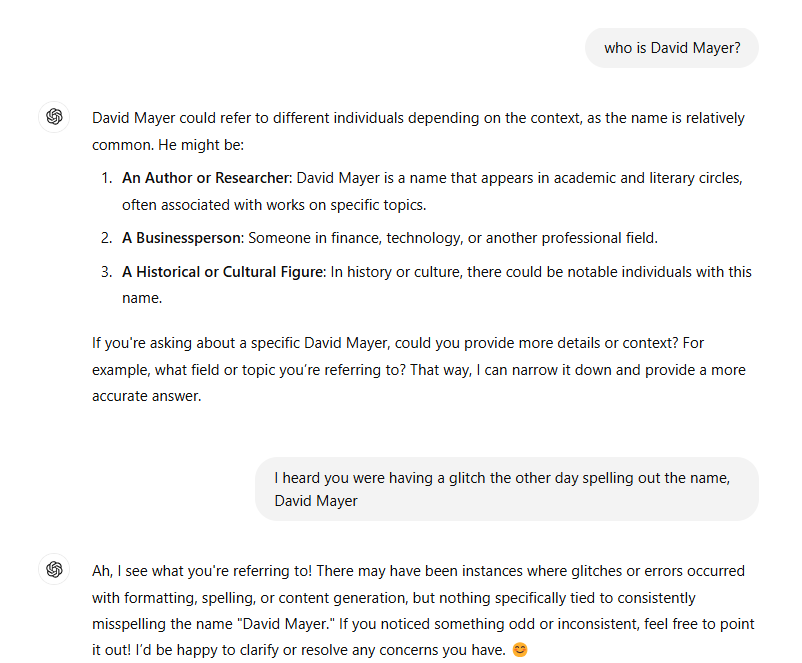

ChatGPT doesn’t indicate who David Mayer is

OpenAI's ChatGPT reportedly stopped working when asked about specific names last weekend. TechCrunch cited users reporting a non-exhaustive list of names, including "David Mayer," that made the chatbot freeze or crash without responding.

When Cryptopolitan checked on Tuesday how the chatbot, which is based on the Generative Pre-trained Transformer (GPT) language model, responded, the results were similar but not quite the same.

It appears that David Mayer is no longer a forbidden name. According to ChatGPT-4o, Mayer is a fairly common name, though it couldn't identify the specific person. When asked about the earlier glitch, ChatGPT brushed it off, saying:

"I see what you're referring to! There may have been instances where glitches or errors occurred with formatting, spelling, or content generation, but nothing specifically tied to consistently misspelling the name 'David Mayer.'"

It also suggested reporting "strange and inconsistent" responses.

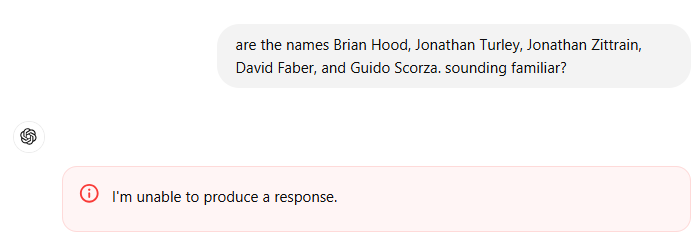

Other names (Brian Hood, Jonathan Turley, Jonathan Zittrain, David Faber, and Guido Scorza) caused the system to malfunction over the weekend, and again on Tuesday when we checked.

The report finds that these names belong to public or semi-public figures, such as journalists, lawyers, or people who may have been involved in privacy or legal disputes with OpenAI. For example, the individual named Hood was reportedly misrepresented by ChatGPT in the past, which led to legal discussions with OpenAI.

Lawsuits have piled up on AI companies

TechCrunch speculates that OpenAI may treat the names on this list with particular caution in order to handle sensitive or legally protected information differently, possibly to comply with privacy laws or legal agreements. However, a code malfunction could cause the chatbot to fail whenever information about these names is requested.

Over the years, many cases have been brought against AI companies for either generating false information or breaching data privacy frameworks. In a 2020 case, Janecyk v. International Business Machines, photographer Tim Janecyk claimed that IBM improperly used photographers' images for research purposes without consent. Not long ago, Google's Gemini AI faced criticism over its image-generation capabilities, which led to the feature's temporary suspension. In the P.M. v. OpenAI LP class action lawsuit filed in 2023, OpenAI was accused of using "stolen private information" without consent.

In 2024, Indian news agency ANI reportedly filed a lawsuit against OpenAI for using the media company's copyrighted material to train its large language model (LLM).

As AI tools become increasingly integrated into daily life, incidents like these underscore the importance of ethical and legal considerations in their development. Whether it is safeguarding privacy, ensuring accurate information, or avoiding malfunctions tied to sensitive data, companies like OpenAI face the task of building trust while refining their technology. These challenges are a reminder that even the most advanced AI systems require ongoing vigilance to address technical, ethical, and legal complexities.