
If you’re not a developer, then why in the world would you want to run an open-source AI model on your own computer?

It turns out there are a number of good reasons. And with free, open-source models getting better than ever (and easy to use, with minimal hardware requirements), now is a great time to give it a shot.

Here are a few reasons why open-source models are better than paying $20 a month to ChatGPT, Perplexity, or Google:

- It’s free. No subscription fees.

- Your data stays on your machine.

- It works offline, no internet required.

- You can train and customize your model for specific use cases, such as creative writing or… well, anything.

The barrier to entry has collapsed. There are now purpose-built applications that let users experiment with AI without the hassle of installing libraries, dependencies, and plugins independently. Just about anyone with a fairly recent computer can do it: A mid-range laptop or desktop with 8GB of video memory can run surprisingly capable models, and some models run on 6GB or even 4GB of VRAM. And on Apple hardware, any M-series chip (from the last few years) will be able to run optimized models.

The software is free, the setup takes minutes, and the most intimidating step, choosing which tool to use, comes down to a simple question: Do you prefer clicking buttons or typing commands?

LM Studio vs. Ollama

Two platforms dominate the local AI space, and they approach the problem from opposite angles.

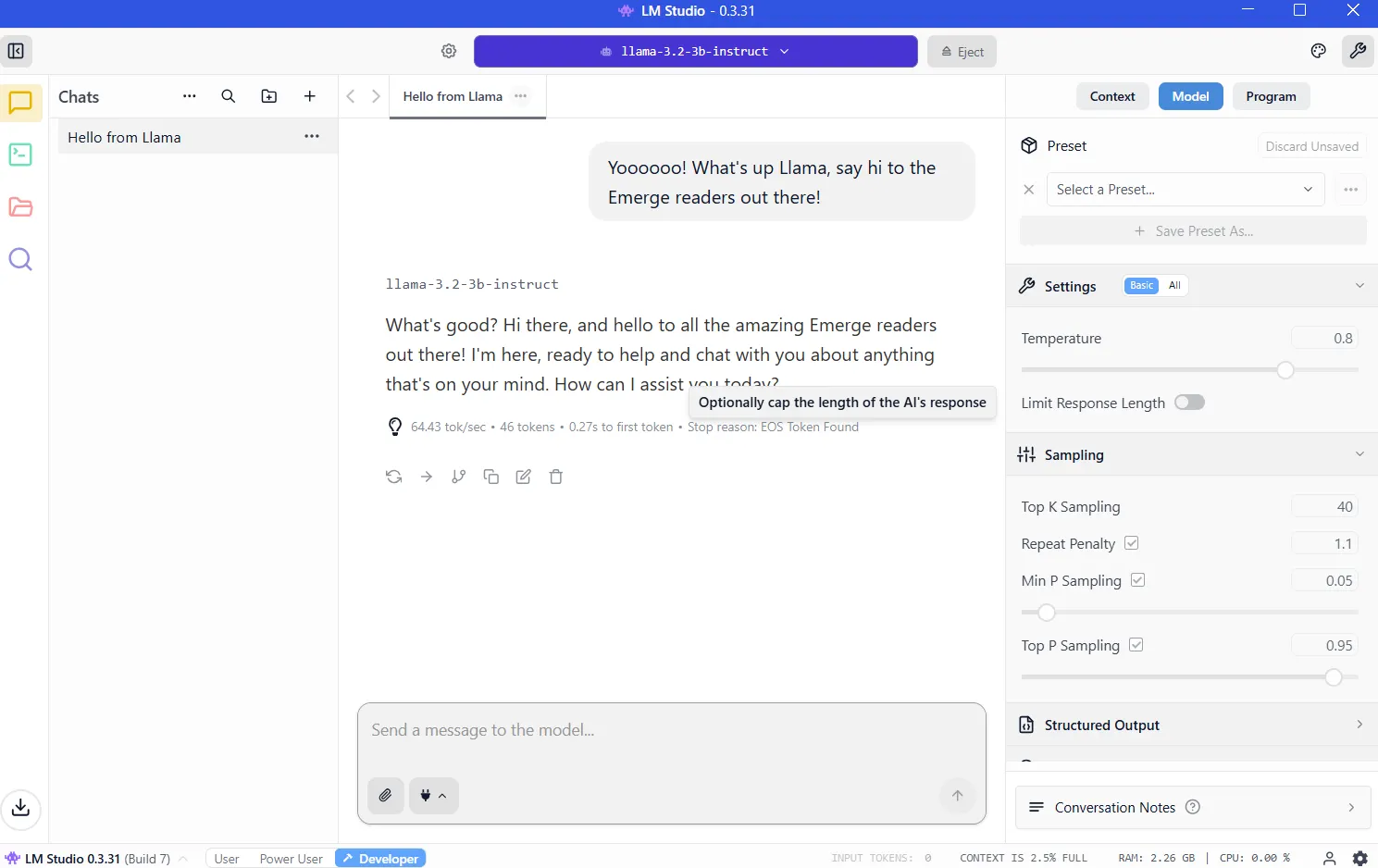

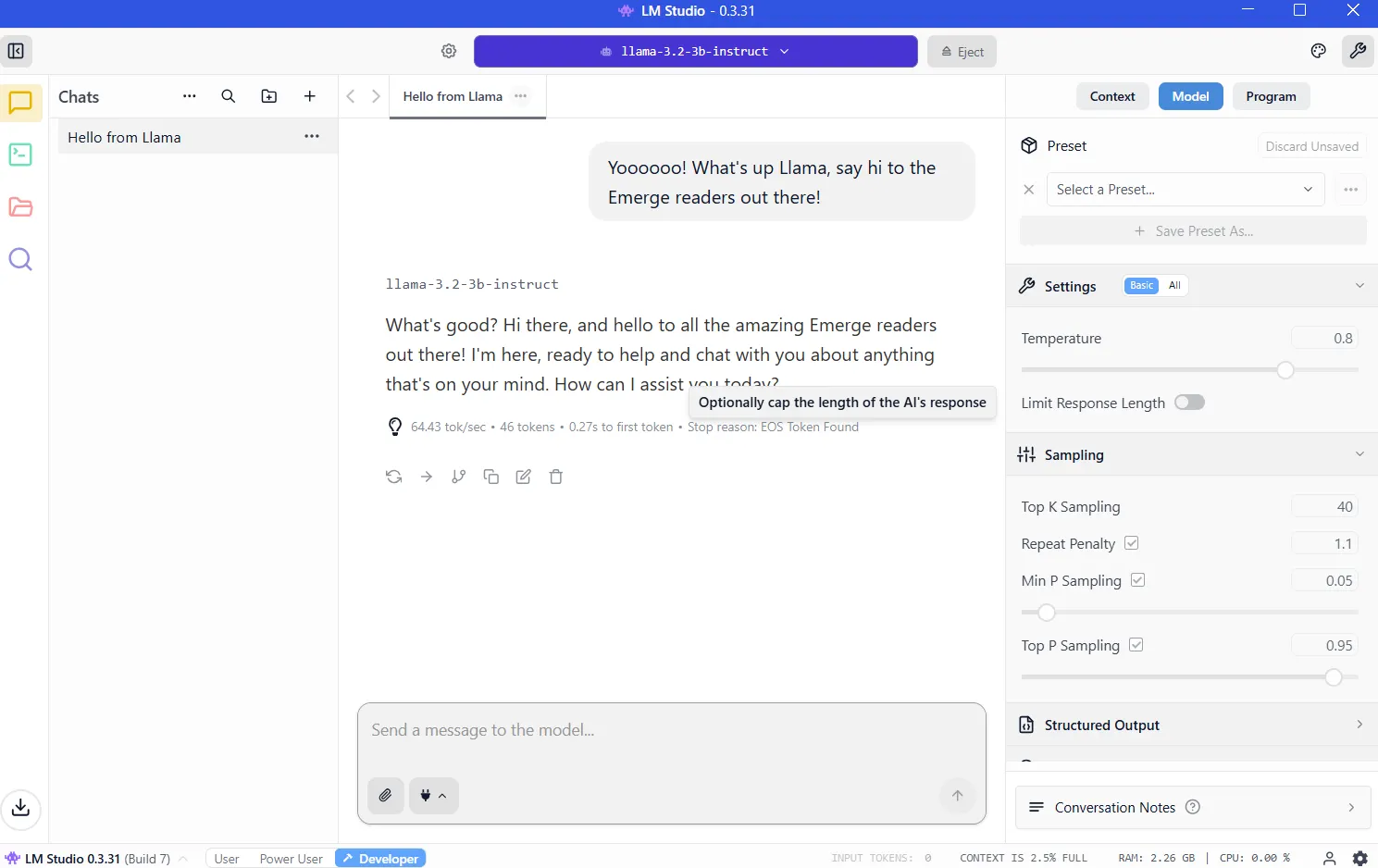

LM Studio wraps everything in a polished graphical interface. You simply download the app, browse a built-in model library, click to install, and start chatting. The experience mirrors using ChatGPT, except the processing happens on your hardware. Windows, Mac, and Linux users get the same smooth experience. For newcomers, this is the natural starting point.

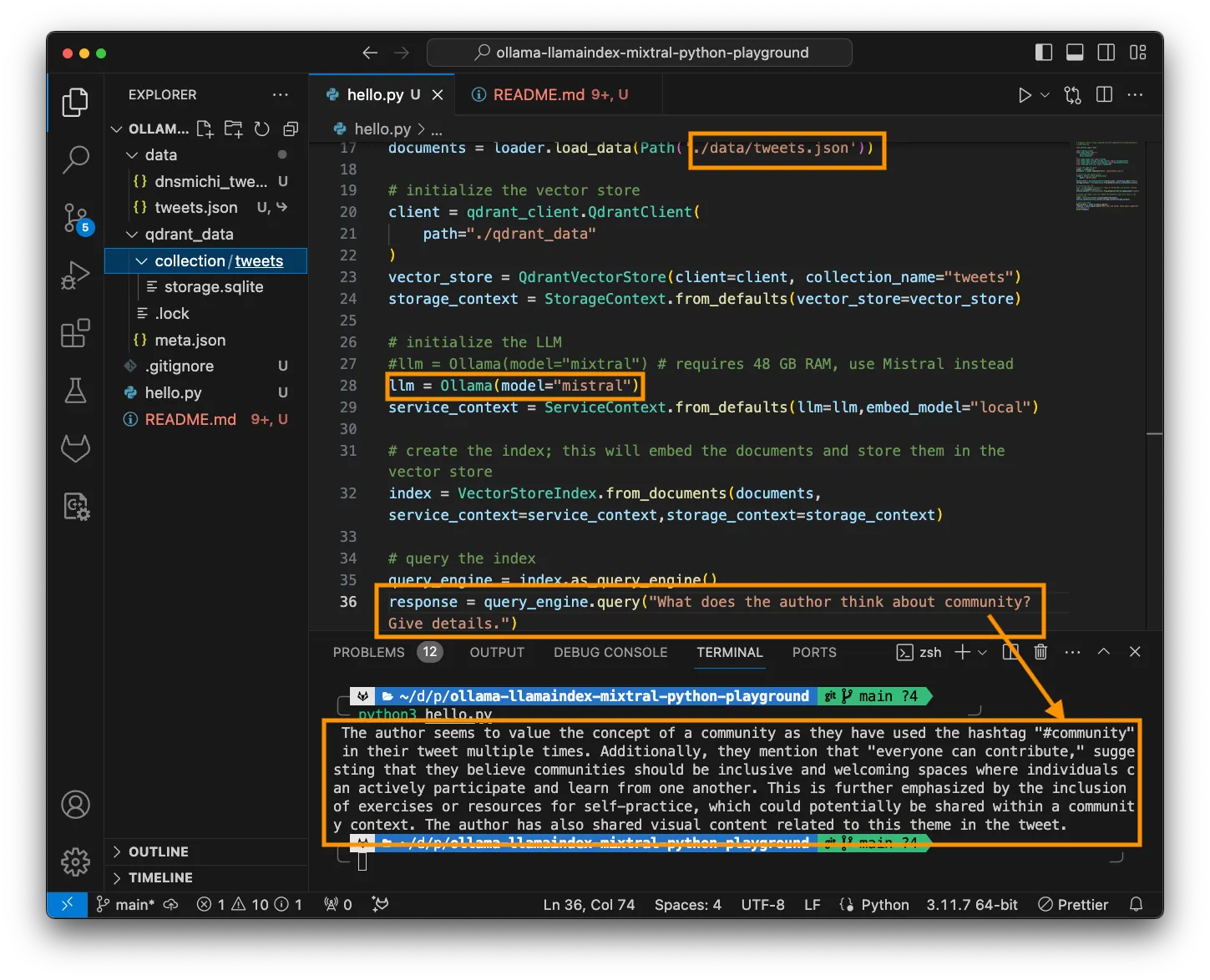

Ollama is aimed at developers and power users who live in the terminal. Install via the command line, pull models with a single command, and then script or automate to your heart’s content. It’s lightweight, fast, and integrates cleanly into programming workflows.

The learning curve is steeper, but the payoff is flexibility. It’s also what power users choose for versatility and customizability.
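To make “script or automate” concrete: Ollama runs a local HTTP server (by default at `http://localhost:11434`), and its `/api/generate` endpoint accepts a model name and a prompt. The minimal sketch below uses only Python’s standard library. It assumes the Ollama server is running and that the example model `llama3.2` has already been pulled; swap in whatever model you actually use.

```python
import json
import urllib.request

# Ollama's default local address; change it if you run the server elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request asking Ollama for one complete (non-streamed) answer."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the prompt and return the generated text from the "response" field."""
    with urllib.request.urlopen(build_generate_request(model, prompt)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With the server up, `generate("llama3.2", "Why does VRAM matter for local LLMs?")` returns the model’s reply as a string. Setting `"stream": False` trades the word-by-word output you see in the terminal for a single JSON object, which is simpler to handle in a script.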

Both tools run the same underlying models using similar optimization engines. Performance differences are negligible.

Setting up LM Studio

Visit https://lmstudio.ai/ and download the installer for your operating system. The file weighs about 540MB. Run the installer and follow the prompts. Launch the app.

Tip 1: If it asks you which type of user you are, choose “developer.” The other profiles simply hide options to make things easier.

Tip 2: It will suggest downloading GPT-OSS, OpenAI’s open-source AI model. Instead, click “skip” for now; there are better, smaller models that will do a better job.

VRAM: The key to running local AI

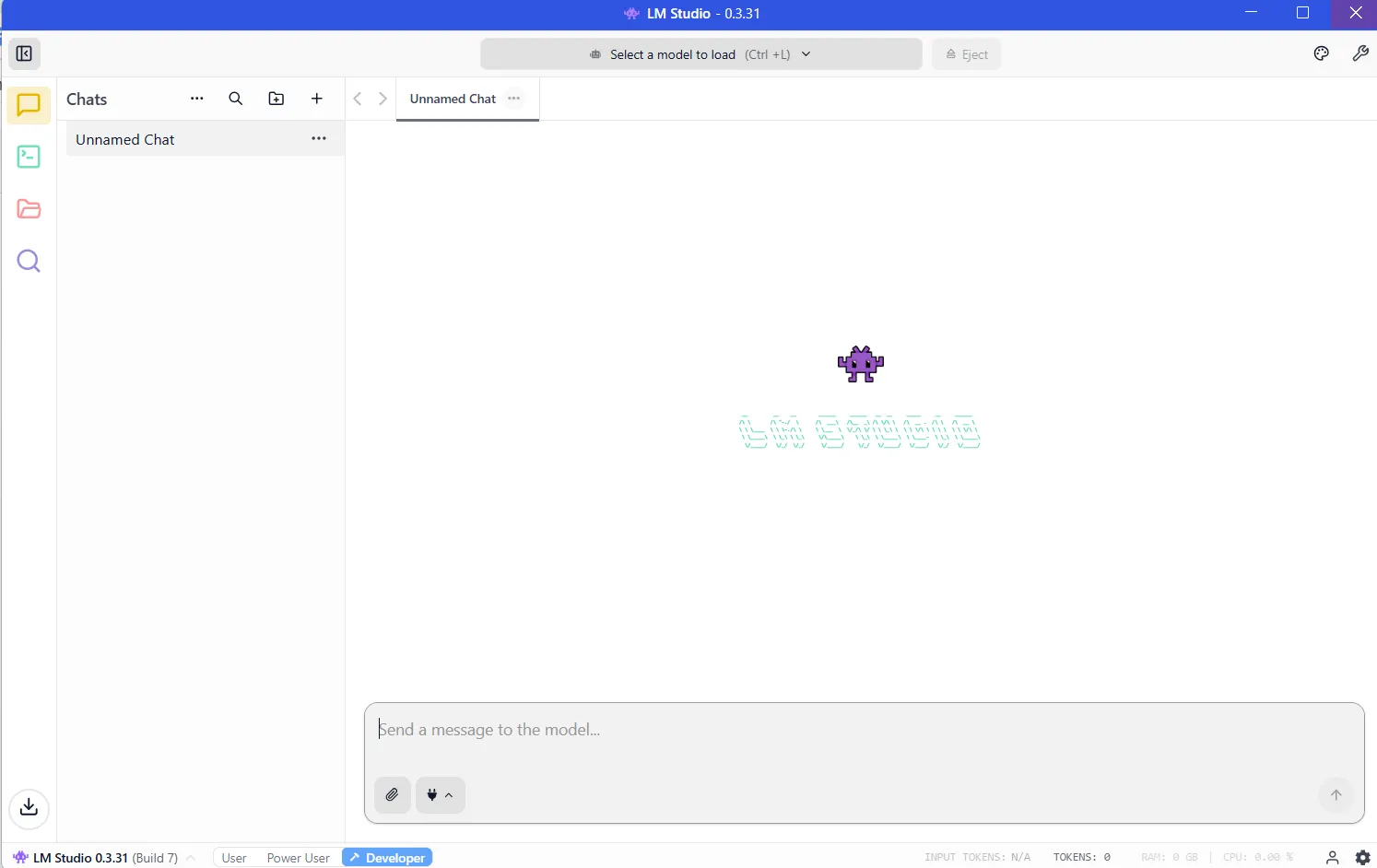

Whenever you are going to additionally own gotten put in LM Studio, the program will be ready to bustle and may per chance per chance well gape like this:

Now you need to download a model before your LLM will work. And the more powerful the model, the more resources it will require.

The critical resource is VRAM, the video memory on your graphics card. An LLM is loaded into VRAM and runs from there during inference. If you don’t have enough space, performance collapses because the system has to fall back on slower system RAM. You’ll want to avoid that by having enough VRAM for the model you want to run.

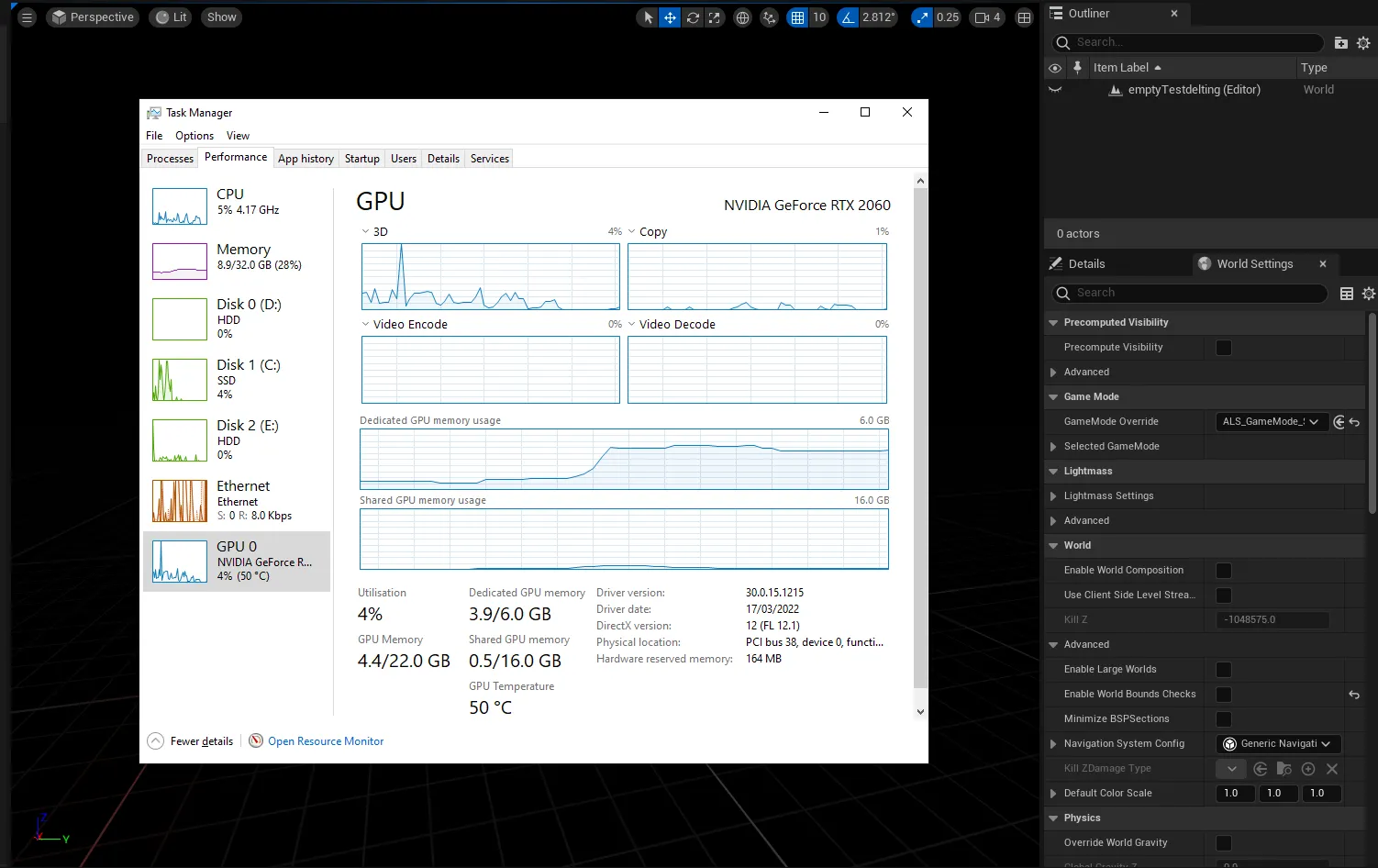

To find out how much VRAM you have, open the Windows Task Manager (Ctrl+Shift+Esc), go to the Performance tab, and click on the GPU entry, making sure you have selected the dedicated graphics card and not the integrated graphics on your Intel/AMD processor.

You can see how much VRAM you have in the “Dedicated GPU memory” section.

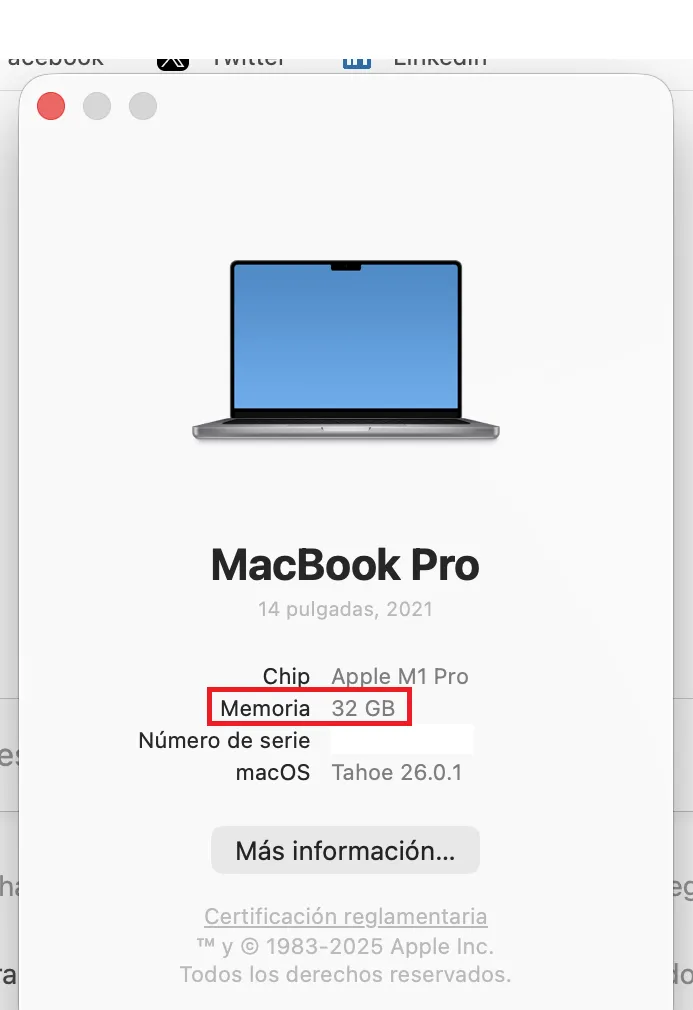

On M-series Macs, things are simpler since RAM and VRAM are shared. The amount of RAM on your machine is the pool of VRAM you can access, though macOS keeps part of it for the system.

To check, click the Apple logo, then click “About This Mac.” See Memory? That’s how much VRAM you have.
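If you prefer the terminal, the same numbers can be read by command. The sketch below is a best-effort helper, not part of LM Studio: it assumes an NVIDIA card with the `nvidia-smi` tool installed (AMD and Intel GPUs need a different tool, or Task Manager), and on macOS it reads total RAM via `sysctl -n hw.memsize`, which matches the shared pool only on M-series Macs.

```python
import platform
import subprocess
from typing import List, Optional


def parse_nvidia_smi_mib(output: str) -> List[int]:
    """Parse nvidia-smi memory.total output: one MiB value per GPU, one GPU per line."""
    return [int(line.strip()) for line in output.splitlines() if line.strip()]


def parse_macos_memsize_gib(output: str) -> float:
    """Convert `sysctl -n hw.memsize` output, which is in bytes, to GiB."""
    return int(output.strip()) / 1024**3


def detect_memory_gib() -> Optional[float]:
    """Best-effort lookup of memory a local model can use; None if unknown."""
    try:
        if platform.system() == "Darwin":
            # On M-series Macs RAM is the shared pool; on Intel Macs this is not VRAM.
            out = subprocess.run(["sysctl", "-n", "hw.memsize"],
                                 capture_output=True, text=True, check=True).stdout
            return parse_macos_memsize_gib(out)
        # NVIDIA only: query total memory of each GPU, in MiB, without headers.
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        ).stdout
    except (FileNotFoundError, subprocess.CalledProcessError):
        return None  # tool missing or failed: fall back to Task Manager / About This Mac
    gpus = parse_nvidia_smi_mib(out)
    return max(gpus) / 1024 if gpus else None
```

On a machine with several GPUs the helper reports the largest card, since a single model is normally loaded onto one card in the simple setups this guide covers.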

You’ll want at least 8GB of VRAM. Models in the 7-9 billion parameter range, compressed using 4-bit quantization, fit comfortably while delivering strong performance. You’ll usually know if a model is quantized because developers indicate it in the name: tags like Q4_K_M or Q8_0 (common on GGUF files) or a precision such as FP8 or FP4 point to a quantized model, while BF16 and FP16 mark 16-bit versions that have not been compressed further. The lower the number (FP32, FP16, FP8, FP4), the fewer resources the model will consume.
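The arithmetic behind those numbers is simple: the weights take roughly parameters × bits per weight ÷ 8 bytes, so a 7B model at 4 bits is about 3.5GB and the same model at FP16 is about 14GB. The sketch below applies that rule of thumb. The 20% overhead for the context cache and runtime buffers is an assumption, and real 4-bit GGUF files come out slightly larger than the formula because some layers are kept at higher precision.

```python
def weights_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone in GB: parameters x bits / 8 bits per byte."""
    return params_billions * bits_per_weight / 8


def fits_in_vram(params_billions: float, bits_per_weight: float,
                 vram_gb: float, overhead: float = 1.2) -> bool:
    """Rough fit check. `overhead` is an assumed 20% margin for the context
    cache and runtime buffers; actual usage grows with context length."""
    return weights_gb(params_billions, bits_per_weight) * overhead <= vram_gb
```

For example, `fits_in_vram(8, 4, 8)` is `True` (about 4GB of weights on an 8GB card), while `fits_in_vram(7, 16, 8)` is `False`, which is why quantized versions are the ones to look for on mid-range hardware.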

It’s not apples to apples, but think of quantization as the resolution of your screen. You can view the same image in 8K, 4K, 1080p, or 720p. You’ll be able to make out everything regardless of the resolution, but zooming in and being picky about the details will show that a 4K image has more information than a 720p one, though it also requires more memory and resources to render.

But ideally, if you’re really serious, you should buy a good gaming GPU with 24GB of VRAM. It doesn’t matter whether it’s new or not, and it doesn’t matter how fast or powerful it is. In the land of AI, VRAM is king.

Once you know how much VRAM you can tap, you can figure out which models you can run by going to the VRAM Calculator. Or, simply start with smaller models of less than 4 billion parameters and then step up to larger ones until your computer tells you that you don’t have enough memory. (More on this technique in a bit.)

Downloading your models

Once you know your hardware’s limits, it’s time to download a model. Click the magnifying glass icon on the left sidebar and search for the model by name.

Qwen and DeepSeek are good models to start your journey with. Sure, they’re Chinese, but if you’re worried about being spied on, you can rest easy. When you run your LLM locally, nothing leaves your machine, so you won’t be spied on by the Chinese government, the U.S. government, or any corporate entities.

As for viruses, everything we’re recommending comes from Hugging Face, where files are automatically checked for spyware and other malware. But for what it’s worth, the best American model is Meta’s Llama, so you may want to pick that if you’re a patriot. (We offer other recommendations in the final section.)

Note that models do behave differently depending on the training dataset and the fine-tuning methods used to build them. Elon Musk’s Grok notwithstanding, there is no such thing as an unbiased model, because there is no such thing as unbiased information. So pick your poison depending on how much you care about geopolitics.

For now, download both the 3B (a smaller, less capable model) and 7B versions. If you can run the 7B, then delete the 3B (and try downloading and running the 13B version, and so on). If you can’t run the 7B version, then delete it and use the 3B version.
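The step-up method above is just a loop: try sizes from smallest to largest and keep the last one that works. The sketch below spells that out. The `can_run` callback is hypothetical; it stands in for the manual test of loading each model in LM Studio and seeing whether it runs, and is not an LM Studio API.

```python
from typing import Callable, Iterable, Optional


def largest_runnable(sizes_b: Iterable[float],
                     can_run: Callable[[float], bool]) -> Optional[float]:
    """Climb from the smallest model size upward and return the last one that runs.

    `can_run` is a hypothetical stand-in for loading the model by hand and
    checking that it works; it returns True when a given size loads.
    """
    best = None
    for size in sorted(sizes_b):
        if not can_run(size):
            break  # if this size failed, larger ones will not fit either
        best = size
    return best
```

For instance, `largest_runnable([3, 7, 13], lambda s: s <= 7)` returns `7`: the 3B and 7B load, the 13B does not, so you keep the 7B. Stopping at the first failure mirrors the advice to delete what you can’t run instead of downloading ever-larger files.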

Once downloaded, load the model from the My Models section. The chat interface appears. Type a message. The model responds. Congratulations: You’re running a local AI.

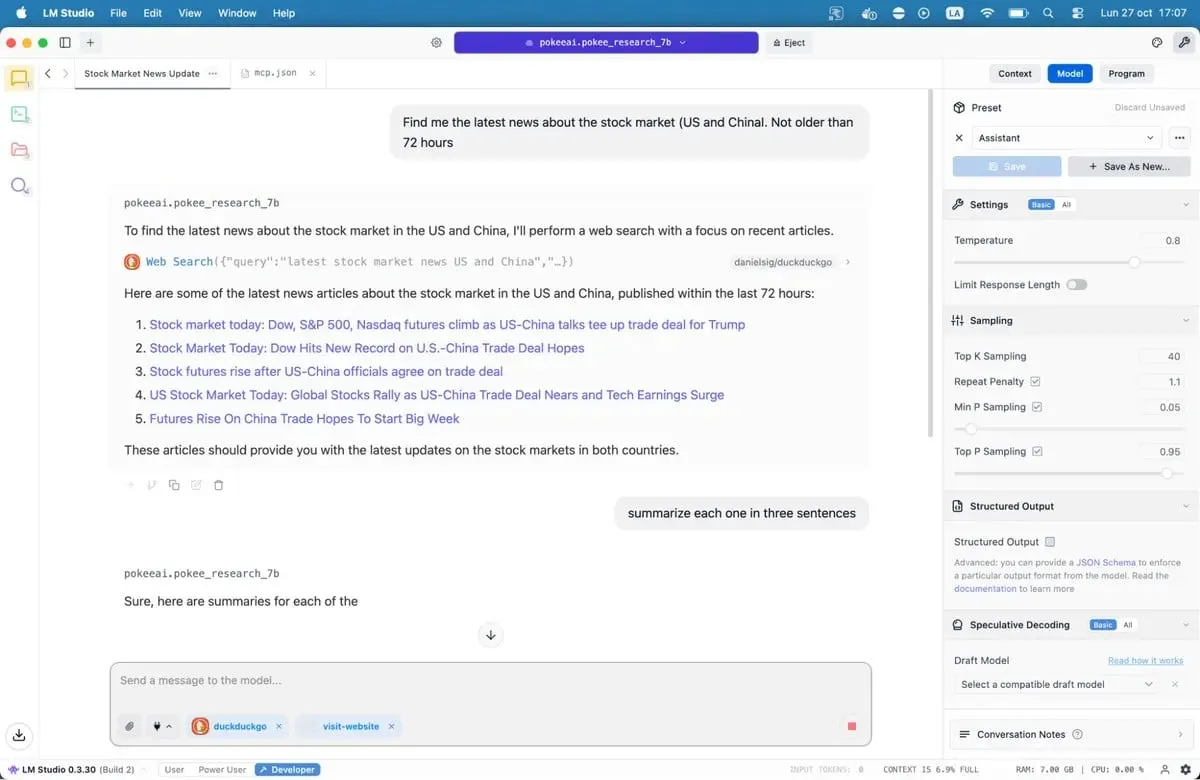

Giving your model internet access

Out of the box, local models can’t browse the web. They’re isolated by design, so you’ll work with them based on their internal knowledge. They’ll do fine for writing short stories, answering questions, doing some coding, etc. But they won’t give you the latest news, tell you the weather, check your email, or schedule meetings for you.

Model Context Protocol (MCP) servers change this.

MCP servers act as bridges between your model and external services. Want your AI to search Google, check GitHub repositories, or read websites? MCP servers make it possible. LM Studio added MCP support in version 0.3.17, accessible through the Program tab. Each server exposes specific tools: web search, file access, API calls.

If you want to give models access to the internet, our complete guide to MCP servers walks through the setup process, including popular options like web search and database access.

Save the configuration file (mcp.json) and LM Studio will automatically load the servers. When you chat with your model, it can now call these tools to fetch live data. Your local AI just gained superpowers.
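For a sense of what goes into that configuration file: LM Studio’s mcp.json lists servers under a top-level `"mcpServers"` key, each with a command to launch it. The sketch below builds such a file from Python. The `fetch` entry (the reference MCP fetch server, started with `uvx`, which requires uv to be installed) is only an example, and since the file’s location on disk varies, editing it from inside LM Studio is the safer route.

```python
import json
from pathlib import Path
from typing import Dict


def build_mcp_config(servers: Dict[str, dict]) -> dict:
    """Wrap server entries in the top-level "mcpServers" key that mcp.json uses."""
    return {"mcpServers": servers}


def write_mcp_config(path: Path, servers: Dict[str, dict]) -> None:
    """Write the configuration to `path` as pretty-printed JSON."""
    path.write_text(json.dumps(build_mcp_config(servers), indent=2), encoding="utf-8")


# Hypothetical example entry: the reference "fetch" MCP server, launched with uvx,
# which lets a model download web pages and read them as text.
EXAMPLE_SERVERS = {
    "fetch": {"command": "uvx", "args": ["mcp-server-fetch"]},
}
```

Printing `json.dumps(build_mcp_config(EXAMPLE_SERVERS), indent=2)` shows the exact JSON you would paste into mcp.json; adding another server means adding another named entry alongside `fetch`.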

Our suggested models for 8GB systems

There are literally hundreds of LLMs available to you, from jack-of-all-trades options to fine-tuned models designed for highly specific use cases like coding, medicine, role play, or creative writing.

Best for coding: Nemotron or DeepSeek are good. They won’t blow your mind, but they work fine for code generation and debugging, outperforming most alternatives in programming benchmarks. DeepSeek-Coder 6.7B offers another solid option, particularly for multilingual development.

Best for general knowledge and reasoning: Qwen3 8B. The model has strong mathematical capabilities and handles complex queries effectively. Its context window accommodates longer documents without losing coherence.

Best for creative writing: DeepSeek R1 variants, but you’ll need some heavy prompt engineering. There are also uncensored fine-tunes like the “abliterated-uncensored-NEO-Imatrix” version of OpenAI’s GPT-OSS, which is good for horror; or Dirty-Muse-Writer, which is good for erotica (so they say).

Best for chatbots, role-playing, interactive fiction, and customer service: Mistral 7B (especially Undi95 DPO Mistral 7B) and Llama variants with large context windows. MythoMax L2 13B maintains character traits across long conversations and adapts tone naturally. For other NSFW role-play, there are many options. You may want to try some of the models on this list.

For MCP: Jan-v1-4b and Pokee Research 7b are good models if you want to try something new. DeepSeek R1 is another good option.

All of these models can be downloaded directly from LM Studio if you just search for their names.

Note that the open-source LLM landscape is moving fast. New models launch weekly, each claiming improvements. You can check them out in LM Studio, or browse through the various repositories on Hugging Face. Test options out for yourself. Bad fits become apparent quickly, thanks to awkward phrasing, repetitive patterns, and factual errors. Good models feel different. They reason. They surprise you.

The technology works. The software is ready. Your computer probably already has enough power. All that’s left is trying it.